Wipefs force full

Create the RAID 0 array:

    $ sudo mdadm --create --verbose /dev/md0 --level=0 --raid-devices=5 /dev/sd[a-e]1
    mdadm: Defaulting to version 1.2 metadata

You can specify different RAID levels with the --level option above. Certain levels require certain numbers of drives to work correctly!

Verify the array is working

For RAID 0, it should immediately show State : clean when you inspect the array with mdadm's --detail option. For other RAID levels, it may take a while to initially resync or do other operations.

You can observe the progress of a rebuild (if choosing a level besides RAID 0, this will take some time) with watch cat /proc/mdstat.

Persist the array configuration to mdadm.conf

    $ sudo mdadm --detail --scan --verbose | sudo tee -a /etc/mdadm/mdadm.conf

If you don't do this, the RAID array won't come up after a reboot.

Formatting the array ends with this line of output:

    Writing superblocks and filesystem accounting information: done

In this example, I used lazy initialization to avoid the (very) long process of initializing all the inodes. For large arrays, especially with brand new drives that you know aren't full of old files, there's no practical reason to do it the 'normal'/non-lazy way (at least, AFAICT).
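The "State : clean" check can be scripted rather than read by eye. This is a minimal sketch, not part of the original guide: the function name array_state is hypothetical, and the sample text only mimics the "State :" line that mdadm --detail prints.

```shell
#!/bin/sh
# Hypothetical helper: read `mdadm --detail` output on stdin and print
# the value of the first "State :" line with surrounding blanks removed.
array_state() {
    awk -F ':' '/^[ \t]*State[ \t]*:/ {
        sub(/^[ \t]+/, "", $2)   # trim leading blanks
        sub(/[ \t]+$/, "", $2)   # trim trailing blanks
        print $2
        exit
    }'
}

# Demonstration on sample text, so no root access or real array is needed.
printf '        Raid Level : raid0\n             State : clean \n' | array_state
```

On a real system the input would come from the array itself, for example sudo mdadm --detail /dev/md0 | array_state, assuming /dev/md0 is the array created earlier.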

Wipefs force install

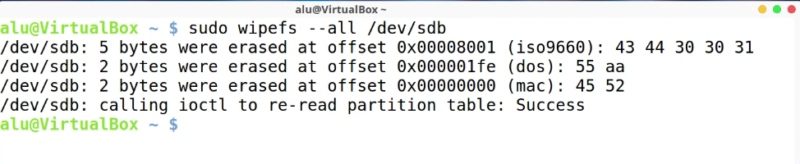

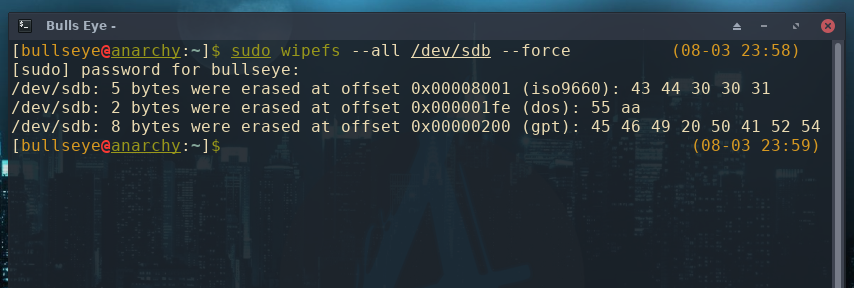

Also, make sure you don't care about the integrity of the data you're going to store on the RAID 0 volume: RAID 0 has no redundancy, so losing one drive loses the whole array.

Note: Other guides, like this excellent one on the Unix StackExchange site, have a lot more detail.

List all the devices on your system:

    $ lsblk

I want to RAID together sda through sde (crazy, I know). I noticed that sda already has a partition and a mount. We should make sure all the drives that will be part of the array are partition-free:

    $ sudo umount /dev/sda?; sudo wipefs --all --force /dev/sda?; sudo wipefs --all --force /dev/sda
    $ sudo umount /dev/sdb?; sudo wipefs --all --force /dev/sdb?; sudo wipefs --all --force /dev/sdb

Do that for each of the drives. If you didn't realize it yet, this wipes everything. It doesn't zero the data, so technically it could still be recovered at this point!

Check to make sure nothing's mounted (and make sure you have removed any of the drives you'll use in the array from /etc/fstab if you had persistent mounts for them in there!):

    $ lsblk

Looking good, time to start building the array!

Partition the disks with sgdisk

You could interactively do this with gdisk, but I like more automation, so I use sgdisk. If it's not installed, and you're on a Debian-like distro, install it: sudo apt install -y gdisk.

WARNING: Entering the wrong commands here will wipe data on your precious drives.

Verify there's now a partition for each drive:

    $ lsblk

If you don't have mdadm installed, and you're on a Debian-like system, run sudo apt install -y mdadm.
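Repeating the wipe and partition steps for every drive is easy to get wrong by hand, so here is a dry-run sketch that only prints the commands for review. It assumes drives sda through sde as in this guide, and print_commands is a hypothetical helper. The sgdisk line is also an assumption, since the text here does not show the exact partitioning command: --new=1:0:0 asks sgdisk for a single partition spanning the whole disk.

```shell
#!/bin/sh
# Dry run: print the per-drive commands instead of executing them.
# Drive letters a-e match the sda..sde example; adjust for your system.
print_commands() {
    for letter in "$@"; do
        dev="/dev/sd${letter}"
        # Unmount any partitions, then wipe signatures from partitions and disk.
        echo "sudo umount ${dev}?"
        echo "sudo wipefs --all --force ${dev}?"
        echo "sudo wipefs --all --force ${dev}"
        # Assumption: one partition covering the whole disk.
        echo "sudo sgdisk --new=1:0:0 ${dev}"
    done
}

print_commands a b c d e
```

Read the printed list against lsblk before running anything; nothing is executed until you paste the lines into a shell yourself.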

In this guide, I'm going to document how I create and mount a RAID array in Linux with mdadm. I'll create a RAID 0 array, but other types can be created by specifying the proper --level in the mdadm create command.

You should have at least two drives set up and ready to go. And make sure you don't care about anything on them.
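Since other RAID levels need different numbers of drives, a quick pre-flight check can catch a bad plan before mdadm runs. This is a sketch under assumptions: min_devices is a hypothetical helper, and the counts are the commonly cited minimums for each level, not limits queried from mdadm (which may accept other counts in some cases).

```shell
#!/bin/sh
# Commonly cited minimum drive counts per RAID level (assumed values,
# not read from mdadm itself).
min_devices() {
    case "$1" in
        0|1)  echo 2 ;;
        5)    echo 3 ;;
        6|10) echo 4 ;;
        *)    echo "unknown RAID level: $1" >&2; return 1 ;;
    esac
}

# Check a planned layout before running mdadm --create.
level=0
devices=5
if [ "$devices" -ge "$(min_devices "$level")" ]; then
    echo "RAID $level with $devices drives: OK"
fi
```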

Wipefs force series

This is a simple guide, part of a series I'll call 'How-To Guide Without Ads'.
